Artificial intelligence continues to evolve rapidly, and one of the most exciting developments is the rise of Vision-Language Models (VLMs): systems that interpret images and describe them in natural language. Traditional AI vision systems work on image data alone, and text-based models process only language; VLMs combine both. They can explain what they see, which opens up new possibilities for automation, analysis, and collaboration.

In a recent post, we explained why traditional machine vision systems that combine rule-based logic with deep learning still outperform VLMs for precision-critical inspection tasks. But that doesn’t mean VLMs lack potential. In fact, they’re beginning to carve out valuable roles where contextual understanding and semantic reasoning matter more than micron-level repeatability.

1. Smarter Visual Understanding

Traditional machine vision systems detect patterns and classify images based on pre-defined rules or deep-learning models trained on specific datasets. VLMs, on the other hand, generalize more readily beyond their training examples. By connecting visual cues with language concepts, they can recognize unfamiliar objects, describe scenes, and even reason about relationships between objects, often without explicit retraining. This is starting to change areas such as inspection, robotics, and industrial analytics, where machines can begin making context-aware decisions rather than following rigid criteria.

2. Industrial Quality Inspection

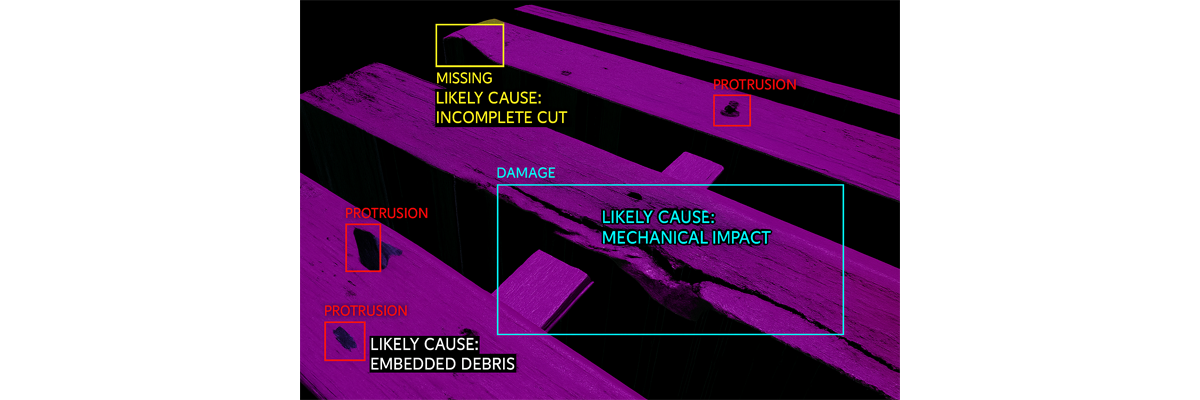

In manufacturing, especially in complex inspection environments like electronics, automotive, or medical devices, VLMs are being tested to complement traditional AI vision systems. While expert rule-based and deep-learning systems excel at detecting known defects, VLMs can interpret novel anomalies and describe them semantically, such as “scratched surface near connector pin” or “missing label on left side.” This hybrid capability could significantly reduce the time engineers spend annotating, classifying, and retraining models.

3. Robotics and Human-Machine Collaboration

In robotics, VLMs allow machines to understand and respond to verbal instructions tied to visual context. For example, instead of hardcoding task sequences, a technician could say: “Pick up the blue component beside the red bin and place it in the inspection tray.” The robot, powered by a VLM, could parse that request and execute it safely and efficiently. This level of multimodal reasoning could accelerate deployment in assembly, inspection, and warehouse operations.
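To make the "parse that request" step more concrete, here is a minimal Python sketch of how a VLM's reply might be turned into a structured command before a robot acts on it. Everything in it is an assumption for illustration: the `PickPlaceCommand` type, the `parse_vlm_reply` helper, and the JSON reply format are hypothetical, and the model call itself is replaced by a hand-written stand-in.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class PickPlaceCommand:
    """Hypothetical structured form of a spoken pick-and-place request."""
    target: str       # object to pick up
    reference: str    # landmark used to disambiguate the target
    destination: str  # where the object should be placed


def parse_vlm_reply(reply_json: str, visible_objects: set[str]) -> PickPlaceCommand:
    """Turn an (assumed) JSON reply from a VLM into a validated command."""
    data = json.loads(reply_json)
    command = PickPlaceCommand(
        target=data["target"],
        reference=data["reference"],
        destination=data["destination"],
    )
    # Refuse to act on anything the vision system has not actually detected.
    named = {command.target, command.reference, command.destination}
    missing = named - visible_objects
    if missing:
        raise ValueError(f"not found in scene: {sorted(missing)}")
    return command


# Stand-in for a model reply; a real system would obtain this from the VLM.
example_reply = json.dumps({
    "target": "blue component",
    "reference": "red bin",
    "destination": "inspection tray",
})
scene = {"blue component", "red bin", "inspection tray", "green bracket"}
command = parse_vlm_reply(example_reply, scene)
print(command)
```

Checking the parsed command against the objects actually detected in the scene is one simple way to keep a misheard or hallucinated instruction from reaching the motion planner.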

4. Technical Documentation and Process Training

Another promising use case is automated reporting and training. VLMs can generate textual summaries from visual data, for instance by describing the outcome of an inspection cycle or creating human-readable maintenance logs. In quality assurance, this means moving closer to self-documenting systems, where every step of a process is captured, analyzed, and summarized in real time.
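As a rough illustration of what a self-documenting inspection cycle could look like, the Python sketch below assembles per-part descriptions into a short human-readable summary. The `InspectionResult` type, the `summarize_cycle` helper, and the part IDs are all hypothetical, and the description strings stand in for text a VLM might produce.

```python
from dataclasses import dataclass


@dataclass
class InspectionResult:
    """Hypothetical record for one inspected part."""
    part_id: str
    passed: bool
    description: str  # stand-in for text a VLM might generate


def summarize_cycle(results: list[InspectionResult]) -> str:
    """Build a plain-text summary of one inspection cycle."""
    failures = [r for r in results if not r.passed]
    passed_count = len(results) - len(failures)
    lines = [
        f"Inspected {len(results)} parts: "
        f"{passed_count} passed, {len(failures)} failed."
    ]
    # List each failure with its description so the log explains itself.
    for r in failures:
        lines.append(f"- {r.part_id}: {r.description}")
    return "\n".join(lines)


# Illustrative data only; part IDs and descriptions are made up.
results = [
    InspectionResult("A-001", True, "no visible defects"),
    InspectionResult("A-002", False, "missing label on left side"),
    InspectionResult("A-003", False, "scratched surface near connector pin"),
]
summary = summarize_cycle(results)
print(summary)
```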

5. Limitations and Outlook

Despite the promise, VLMs are still in early adoption phases for industrial use. Their response latency, data security, and interpretability must improve before they can replace or fully integrate with mission-critical inspection systems. However, progress is steady, and hybrid models, where traditional machine vision handles real-time detection and VLMs manage contextual reasoning, are likely to define the next generation of smart inspection platforms.
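One way to picture such a hybrid is as a simple routing rule: the conventional detector answers first, and only parts it cannot classify confidently are handed to a VLM for a description. The Python sketch below is a minimal version of that idea under stated assumptions. The `Detection` type, the `inspect_part` function, the 0.8 threshold, and the stub detector and describer are all hypothetical placeholders, not a real product API.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Detection:
    """Hypothetical output of a conventional defect detector."""
    label: str         # known defect class, or "unknown"
    confidence: float  # detector score in [0, 1]


def inspect_part(
    image: object,
    detect: Callable[[object], Detection],
    describe: Callable[[object], str],
    threshold: float = 0.8,  # assumed cut-off, to be tuned per application
) -> dict:
    """Use the fast detector when it is confident, else ask the VLM."""
    detection = detect(image)
    if detection.label != "unknown" and detection.confidence >= threshold:
        # Real-time path: no VLM call, so no added latency.
        return {"label": detection.label, "source": "detector", "note": None}
    # Contextual path: the slower model explains what the detector could not.
    return {"label": detection.label, "source": "vlm", "note": describe(image)}


# Stubs standing in for a real detector and a real VLM.
def confident_detector(image: object) -> Detection:
    return Detection("solder bridge", 0.95)


def uncertain_detector(image: object) -> Detection:
    return Detection("unknown", 0.30)


def stub_describe(image: object) -> str:
    return "scratched surface near connector pin"


fast = inspect_part(None, confident_detector, stub_describe)
slow = inspect_part(None, uncertain_detector, stub_describe)
print(fast)
print(slow)
```

Keeping the VLM off the real-time path in this way sidesteps the latency concern mentioned above, at the cost of only getting explanations for the cases the detector flags as uncertain.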

Conclusion

VLMs are redefining how machines perceive and describe the world. For manufacturing and automation companies, they offer a glimpse into a future where inspection systems not only detect a defect but also explain it in plain language. As AI continues to evolve, this fusion of sight and language will be a cornerstone of more transparent, intelligent, and human-aligned automation. At EZ Automation Systems, we view VLMs as a potential next layer in intelligent inspection: a natural progression from today’s expert and deep-learning systems toward explainable AI that can communicate findings more intuitively.